The blog is based on real world issues that I have seen over the past few years.

SQL server is so easy to setup and cluster that anyone with good knowledge of general infrastructure can do this. This is good but can lead to incorrect configuration. How many people tweak MAXOP ? do you lock pages in memory? do you create one tempdb per CPU core? A good DBA will know what all these questions mean. Also quite rightly most people put LOGS and DATA on their own drive. But for high transactional database have you thought about tempdb and snapshot isolation? Far too much to go over in this blog.

Lets assume the following has happened; You have a hosting requirement for CRM (I picked CRM as at the moment , I ‘do a lot’ of CRM). So your CRM Architect sizes the servers as :

Your infrastructure team build this and put in the right firewalls etc. Now comes the monitoring. The SQL server has a the following drives :

| OS | 120GB | C: |

| DATA | 120GB | D: |

| LOGS | 60GB | L: |

On some days CRM’s ReIndex job starts and the LOG file gets very large. Your disk monitoring software detects the low disk space. Someone logs in to the database server looks at the log drive and see that it has low disk space. So… they open google and typing “Log file large SQL” and if you follow the first article without reading in correctly you may find yourself ready to enter something like :

DBCC SHRINKFILE (’CRMORG_Log’, 1000)

Lets assume that the backup has run so the log file has lots of free space.

You look at free space on disk and wow.. you have fixed it. The next day comes and the first few CRM transactions need to grow the log. In other words when a user updates a record in CRM it could trigger a transaction that has the side affect of ‘growing’ the log. This will take time and other transaction may have to queue up behind.

This performance issue is noted so you contact your DBA. The DBA may run a script like :

SELECT databasename,e.name AS eventname,cat.name AS [CategoryName],starttime,e.category_id,This will show that the log file has been auto growing. The DBA may then pre-size the log to fix this.

loginname,loginsid,spid,hostname,applicationname,servername,textdata,objectname,eventclass,

eventsubclass

FROM ::fn_trace_gettable(@path, 0)

INNER JOIN sys.trace_events e

ON eventclass = trace_event_id

INNER JOIN sys.trace_categories AS cat

ON e.category_id = cat.category_id

WHERE e.name IN( 'Data File Auto Grow', 'Log File Auto Grow' )

ORDER BY starttime DESC

Now… we are back to the start and you run low on disk space. To be honest the DBA may not get involved and you may not pre-size the log.

What should we do?

Firstly, you need to understand the role of a DBA. The ability to install SQL and create a database does not mean you are a DBA. No offence meant its just that Microsoft have made SQL so easy to manage that people don’t hire a DBA and that's a key issue. You should at least ‘get one in’ for a day or two. I have visited many customers and sat with their DBA and pointed out many issues with the health of their SQL server.If the databases have been sized correctly. Based on; Number of users, User Transactions, Backlog e.g. data load, data type e.g. any blobs? and Backup Strategy (You need to understand the backup strategy to size the logs).

We have this disk layout

| OS | 120GB | C: |

| DATA | 120GB | D: |

| LOGS | 60GB | L: |

DBCC SQLPERF(LOGSPACE)

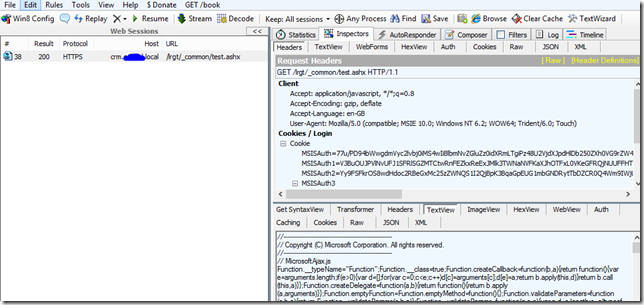

You will need a monitoring tool capably of view the output of SQL statements.

What else?

I intend to write more blogs on this subject as I come across issues.I have also seen systems that monitor backups but they don't monitor the SQL backups. In simple terms you have a SQL jobs that BACKUPS a DATABASE to DISK. You have a backup tool that backs up the BACKUP from DISK to TAPE. You monitor the backup tool but what if the SQL backup jobs have been failing? who is looking at that? This would also cause large transaction logs.